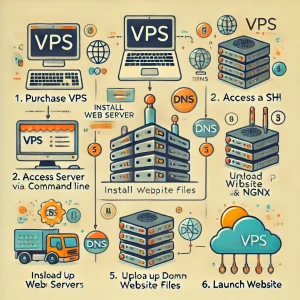

Today, success on a digital platform is no longer an option but a necessity for businesses of all sizes. Whether you run a small startup, a growing mid-sized company, or a large enterprise, your website is your digital storefront, presenting your goods and services to potential customers. Perhaps the most critical decision you'll make in establishing or enhancing your online presence is choosing a good web hosting service. Among the many choices available today, Linux is a popular one for businesses in Canada. It is known to be reliable, secure, and cost-effective, which makes it a solid foundation for your website.

In this blog, we'll explore the top reasons to opt for Linux web hosting in Canada, look at the advantages of Linux hosting for small businesses, and shed light on the best Linux hosting options for Canadian businesses. We'll also cover reliable Linux hosting services in Canada that can help you boost your website. In such a vast and chaotic landscape, one hosting provider stands out as being in a class of its own: 4GoodHosting. As we move through the article, we'll show how 4GoodHosting can meet your business needs and further your online objectives.

Why is Linux so popular?

Linux is popular because it is versatile and cost-effective. Many organizations run Linux servers across their infrastructure, in areas as varied as embedded systems, private clouds, and endpoints. Because Linux is open source, developers are free to customize the operating system however they want, without being limited by a vendor's restrictions.

Advantages of Linux Hosting

Linux-based cloud servers can support fully featured, mature websites, including those with sophisticated workloads and heavy resource consumption on cloud infrastructure.
A fully managed, dedicated Linux server gives customers stable performance, with dedicated hardware resources for their cloud-based applications. Although the normally high prices of dedicated Linux hosting plans deter some customers, choosing Linux-based dedicated cloud server hosting allows organizations to cut costs and focus...