It’s been said that you can never predict what the future holds, and in the same way you can’t predict what it will hold for you. Perhaps we can also agree that you’re going to be equally uncertain about what the future will demand of you. There’s likely no better place to see this reality on display than the digital world, and it’s especially true of applications.

Some apps make quite a splash upon introduction, while others sink like a stone. The majority fall somewhere in between, but for those that do make a splash it’s not uncommon to see demand for the app exceed even the developer’s expectations. That’s the rosy part of it all, and the developer gets to bask in the success and relish the demand.

When the demand has more to do with what the app’s users expect of it, particularly if they’re paying users, that enthusiasm is often mixed with some degree of ‘how am I going to accommodate these demands?’

Now here at 4GoodHosting we’re well established as a quality Canadian web hosting provider, but we’re the furthest thing from web app developers. That’s probably a good thing, considering our expertise is in what we do and a developer’s expertise is in what they do. That’s as it should be, but one thing we do know is that everyone is all ears when it comes to learning how to be better at what they do.

Which leads us to today’s topic: what are the best ways to scale apps rapidly when a developer simply doesn’t have as much time as they’d like to accommodate users who expect expanded capabilities now?

We’ll preface this by saying we’ve taken it entirely from SMEs (subject matter experts, if you’re not familiar with the acronym) who are on top of everything related to the world of app development, but we can say it checks out as legitimately good information.

Pandemic Spiking Demand

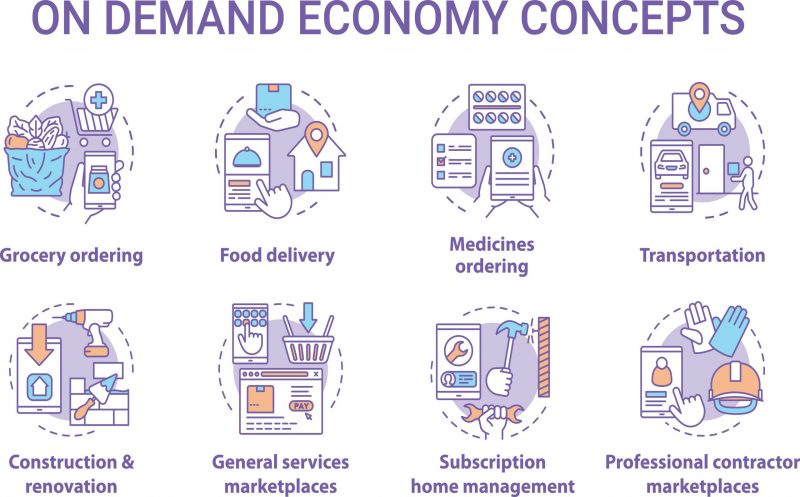

The COVID-19 pandemic continues on, and many companies in e-commerce, logistics, online learning, food delivery, online business collaboration, and other sectors are seeing big-time spikes in demand for their products and services. Shelter-in-place and lockdown orders have changed usage patterns for many of these companies, and that is what’s creating the surges.

These surges have pushed many an application to its limits, and that often results in frustrating outages and delays for customers. So how do you best and most effectively accommodate growing application loads?

What’s needed is the quickest and most cost-effective way to increase the performance and scalability of applications, so you can offer a satisfactory customer experience without taking on excessive costs in doing so.

Here are 6 of the best ways to do that:

Tip 1: Understand the full challenge

Addressing only part of the problem is almost certainly not going to be sufficient to remedy these new shortcomings. Be sure to consider all of the following.

- Technical issues - Application performance under load (and how end users experience it) is determined by the interplay between latency and concurrency. Latency is the time required for a specific operation, or more simply how long it takes for a website to respond to a user request.

- Concurrency - The number of simultaneous requests a system can handle is its concurrency. When concurrency doesn’t scale, a significant increase in demand can cause an increase in latency, because the system is unable to respond to all requests as quickly as they are received. The usual outcome is a poor customer experience, as response times climb sharply and reflect badly on your app. So while ensuring low latency for a single request may be essential, on its own it may not solve the challenge created by surging concurrency. You need to be aware of this and make the right moves to counter it.

It’s imperative that you find a way to scale the number of concurrent users while simultaneously maintaining the required response time. It’s equally true that applications must be able to scale seamlessly across hybrid environments, and often ones that span multiple cloud providers and on-premises servers.

- Timing - Full-scale strategies, such as rebuilding an application from scratch, can take years to implement and aren’t helpful for addressing immediate needs. The solution you adopt should enable you to begin scaling in weeks or months.

- Cost - Budget restrictions are a reality for nearly every team dealing with these issues, so a strategy that minimizes both upfront investment and added operational costs is going to be hugely beneficial. It’s something you need to have in place before you get into the nitty gritty of what your expanded scaling is going to involve.
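To make the interplay between latency and concurrency a little more concrete, here is a minimal sketch of a toy queueing simulation. Everything in it is an assumption for illustration: the `simulate` function, its parameters, and the traffic numbers are hypothetical and not taken from any real system. It models a service with a fixed number of workers (its concurrency) and a fixed time per request, then reports the mean response time for evenly spaced arrivals.

```python
import heapq


def simulate(arrival_rate, service_time, workers, n_requests=1000):
    """Return the mean response time in seconds for evenly spaced arrivals.

    arrival_rate: requests per second (hypothetical load)
    service_time: seconds one worker needs for one request
    workers:      how many requests can be processed at once
    """
    # Each heap entry is the time at which one worker becomes free.
    free_at = [0.0] * workers
    heapq.heapify(free_at)

    total_response = 0.0
    for i in range(n_requests):
        arrival = i / arrival_rate
        # A request starts when it has arrived AND a worker is free.
        start = max(arrival, heapq.heappop(free_at))
        finish = start + service_time
        heapq.heappush(free_at, finish)
        total_response += finish - arrival
    return total_response / n_requests


# Capacity is workers / service_time requests per second.
light = simulate(arrival_rate=50, service_time=0.1, workers=10)        # under capacity
overloaded = simulate(arrival_rate=200, service_time=0.1, workers=10)  # over capacity
scaled = simulate(arrival_rate=200, service_time=0.1, workers=20)      # capacity doubled

print(f"light load:    {light:.3f}s")
print(f"overloaded:    {overloaded:.3f}s")
print(f"after scaling: {scaled:.3f}s")
```

In this toy model the time to process a single request never changes, yet the mean response time balloons once arrivals exceed what the workers can absorb, and it drops back once concurrency is scaled up. That is the point made above: low latency for a single request does not help if concurrency cannot keep up.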

Tip 2: Plan for both the short and long term

Even when you’re smack dab in the middle of trying to increase concurrency while keeping latency in check, it’s never a good idea to rush into a short-term fix that may turn out to be a dead end. If a complete redesign of the application isn’t planned or feasible, then you should adopt a strategy that will enable the existing infrastructure to scale to whatever extent is needed.

Tip 3: Choose the right technology

The general consensus is that the most cost-effective way to rapidly scale up system concurrency, while maintaining or even improving latency, is with open source in-memory computing solutions. Apache Ignite, for example, is a distributed in-memory computing solution that is deployed on a cluster of commodity servers. It pools the CPUs and RAM of the cluster and distributes data and compute to the individual nodes. Whether on-premises, in a public or private cloud, or in a hybrid environment, Ignite can be deployed as an in-memory data grid (IMDG) inserted between the existing application and data layers, requiring no major modifications to either component. Ignite also supports ANSI-99 SQL and ACID transactions.
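As a rough illustration of what it means to slot an in-memory layer between the application and data layers, here is a minimal sketch of a read-through, write-through cache. It uses only plain Python and is not Ignite’s API: `SlowDatabase` and `ReadThroughCache` are hypothetical names, and the artificial delay simply stands in for disk and network latency.

```python
import time


class SlowDatabase:
    """Hypothetical stand-in for a disk-based data store."""

    def __init__(self, rows, delay=0.01):
        self._rows = dict(rows)
        self._delay = delay
        self.reads = 0  # counts how often the database is actually read

    def get(self, key):
        time.sleep(self._delay)  # simulate disk/network latency
        self.reads += 1
        return self._rows.get(key)

    def put(self, key, value):
        time.sleep(self._delay)
        self._rows[key] = value


class ReadThroughCache:
    """In-memory layer sitting between the application and the database."""

    def __init__(self, backend):
        self._backend = backend
        self._memory = {}

    def get(self, key):
        if key not in self._memory:
            # Cache miss: read through to the database once, then keep it in RAM.
            self._memory[key] = self._backend.get(key)
        return self._memory[key]

    def put(self, key, value):
        # Write-through: update the database first, then the in-memory copy.
        self._backend.put(key, value)
        self._memory[key] = value


db = SlowDatabase({"customer:1": "Ada"})
cache = ReadThroughCache(db)

cache.get("customer:1")  # first read goes to the database
cache.get("customer:1")  # second read is served from memory
print("database reads:", db.reads)
```

The application keeps calling `get` and `put` as before, and the database stays the system of record, which is why this kind of layer can be added without major changes to either side. A real data grid spreads that in-memory copy across many servers rather than holding it in one process.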

When an Apache Ignite in-memory data grid is in place, relevant data from the database is cached in the RAM of the cluster. It is then available for processing without the delays caused by normal reads and writes to a disk-based data store. The Ignite IMDG uses a MapReduce approach and runs application code on the cluster nodes to execute massively parallel processing (MPP) across the cluster with minimal data movement across the network. Together, in-memory data caching, sending compute to the cluster nodes, and MPP dramatically increase concurrency and reduce latency, and can deliver up to a 1,000 times increase in application performance compared to applications built on a disk-based database.
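The MapReduce idea mentioned above can be sketched in a few lines. This is a simplified, single-machine illustration and not Ignite’s compute API: `map_reduce` is a hypothetical helper, each inner list stands in for the data held by one cluster node, and threads stand in for the nodes themselves (so it shows the structure of the approach, not real parallel speedup).

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_reduce(partitions, map_fn, reduce_fn):
    """Run map_fn on each partition concurrently, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        # Map step: the function travels to each partition; only the small
        # partial result comes back, not the underlying data.
        partials = list(pool.map(map_fn, partitions))
    # Reduce step: fold the partial results into one answer.
    return reduce(reduce_fn, partials)


# Hypothetical order totals, already split across three "nodes".
partitions = [
    [12.5, 30.0],
    [7.25],
    [100.0, 1.25],
]

grand_total = map_reduce(partitions, sum, lambda a, b: a + b)
print("grand total:", grand_total)
```

The design point is that each partition returns a single number rather than shipping all of its rows to one place, which is what keeps data movement across the network to a minimum.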

Ignite’s distributed architecture makes it possible to increase the compute power and RAM of the cluster simply by adding new nodes. The cluster automatically detects the additional nodes and redistributes data across all nodes. This means optimal use of the combined CPU and RAM, and it gives you the scalability to support rapid growth.
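To give a feel for how data can be redistributed when a node joins, here is a minimal sketch of rendezvous (highest-random-weight) hashing, a common technique for assigning keys to nodes. To our understanding Ignite’s default affinity function is based on the same idea, but this toy version is not Ignite’s implementation; the node names, key names, and the `owner` and `assign` helpers are all hypothetical.

```python
import hashlib


def owner(key, nodes):
    """Pick the node with the highest hash score for this key."""
    def score(node):
        digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return max(nodes, key=score)


def assign(keys, nodes):
    """Map every key to the node that owns it."""
    return {k: owner(k, nodes) for k in keys}


keys = [f"order-{i}" for i in range(10_000)]

before = assign(keys, ["node-1", "node-2", "node-3"])
after = assign(keys, ["node-1", "node-2", "node-3", "node-4"])

# Keys whose owner changed when the fourth node joined.
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved) / len(keys):.0%} of keys moved to the new node")
```

With this scheme a key only changes owner if the new node scores highest for it, so existing nodes never shuffle data among themselves. Roughly one quarter of the keys move when a fourth node joins, and the rest stay where they are.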

We only have so much space to work with here, but a Digital Integration Hub (DIH) and Hybrid transactional/analytical processing (HTAP) get honourable mentions here as other really smart choices for scaling up apps. Look into them too.

Tip 4: Open Source Stacks - Consider Them

You need to identify which other proven open-source solutions make the grade for allowing you to create a cost-effective, rapidly scalable infrastructure, and here are 3 of the best:

Apache Kafka or Apache Flink for building real-time data pipelines for delivering data from streaming sources, such as stock quotes or IoT devices, into the Apache Ignite in-memory data grid.

Kubernetes for automating the deployment and management of applications that have been containerized in Docker or other container solutions. Putting applications in containers and automating their management is becoming the norm for successfully building real-time, end-to-end business processes in our new distributed, hybrid, multi-cloud world.

Apache Spark for processing and analyzing large amounts of data efficiently. Spark takes advantage of the Ignite in-memory computing platform to more effectively train machine learning models using the huge amounts of data being ingested via a Kafka or Flink streaming pipeline.

Tip 5: Build, Deploy, and Maintain Correctly

The need to deploy these solutions in an accelerated timeframe is clear, and the consequences of delays are usually very serious. For both reasons it is necessary to make a realistic assessment of the in-house resources that are available for the project. If you and your team are short on either time or expertise, then you shouldn’t hesitate to consult with 3rd-party experts. You can easily obtain support for all of these open source solutions on a contract basis, making it possible to gain the required expertise without the cost and time required to expand your in-house team.

Tip 6: Keep Learning More

There are plenty of online resources available to help you get up to speed on the potential of these technologies and find strategies that fit your organization and what is being demanded of you. Start by working out whether your goal is to ensure an optimal customer experience in the face of surging business activity, or whether it’s to start planning for growth in a (hopefully) coming economic recovery. Then determine whether, for either aim, an open source infrastructure stack powered by in-memory computing is your cost-effective path to combining speed with scalability, and whether it can be rolled out without taxing you and your people too much.