Web hosting costs can make or break a digital business. It is a fact that everyone needs a good service where their website, from a simple blog to a corporate site, can be hosted. All the same, web hosting gets costly in the long run and can strain the budgets of new businesses and start-ups. Fortunately, there are a number of ways to reduce these costs without lowering the level of service. This article looks at ten key strategies that help you avoid rising overhead costs for the web hosting behind your site. Here at my blog we go behind the scenes to find out how you can improve the quality of your hosting and keep it cost-effective at the same time. Special thanks also go to 4GoodHosting.com, a service that does a great job of offering high-quality, very affordable hosting plans!

What is Web Hosting? A Summary of Hosting Services

Imagine you built a house but had no land to put it on and no utilities to make it work, so nobody could get to it. A website without hosting is in the same position. Web hosting is a service that supplies the storage space (the land) and the utilities that make your website accessible on the Internet. Companies that provide this service are called web hosts. Essentially, they rent out space on their servers (powerful computers) for all the files that make up your website: code, images, and text. There are many types of web hosting plans, and the right one depends on the needs of your website. Plans can be compared in terms of house size and house location.

Here's how it works:

- Storage space: A web hosting company rents you space on a powerful computer called a server. This server holds all the files for your website, such as code, images, and videos.

- Always up and running: The company runs the...

On This Page

- Category: Hosting Related

- Top Budget-Friendly Web Hosting Tips for Maximizing Efficiency and Cutting Costs

- How to Optimize Your Website Performance with Linux Hosting in Canada

- The Ultimate Guide to Choosing the Best Managed WordPress Hosting for Your Website

- VPS Hosting Advantages Over Shared Web Hosting in Canada

- Streamlining Your Packer and Movers Business with Calgary VPS Hosting: A Complete Guide

- Choosing the Best Web Hosting for Law Firms in Vancouver: A Comprehensive Guide

- How to Migrate Your Website to an Edmonton Web Hosting Provider: A Practical Guide

- A Complete 10-Step Guide to Starting a Successful Business in Canada

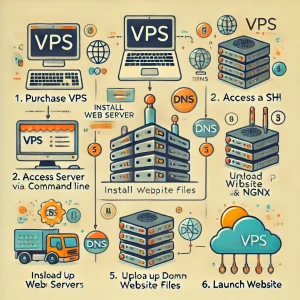

- How to Host a Website on VPS: Step-by-Step Guide for Beginners

- SEO for Photographers in Montreal: Boost Your Online Presence and Attract More Clients